ComnetCo Blog

Optimizing a Goldilocks AI Compute Infrastructure

Segment 1. Optimized Networking

In a world rife with hype, hand-waving and broad brushstrokes about AI, it would appear you can easily accelerate innovation and reduce costs with a pre-built computer architecture. In the real world, however, buying and deploying an HPC system to accelerate AI model training is not that simple. Yes, it would be nice to find a system on the shelf somewhere that meets your needs (one that supports your users with enough power and within your budget) with a scalable, cost-effective, and modular architecture. However, the number of users you need to support surely differs from that of the enterprise or research institution down the road. And if, for example, your teams are under pressure to train AI models quickly, you need a robust computing infrastructure with high-performance hardware, fast networking and storage interconnects, and optimized software frameworks.

In other words, to do your work efficiently and stay within your budget, what you really need is a compute infrastructure that is neither under-provisioned nor over-provisioned. Rather, you need a system that is right-sized and optimized to meet your unique requirements. So how does your organization purchase and deploy such a Goldilocks HPC system? Architecting an AI compute infrastructure with just the right mix of power and capabilities, one that won’t gobble up more electricity for processing and cooling than you can afford, requires more than simply getting your hands on a wheelbarrow full of state-of-the-art GPUs. It is an immensely complex undertaking that generally requires working with experienced partners.

Fortunately, Hewlett Packard Enterprise (HPE) has amassed unparalleled expertise through years of delivering powerful IT systems for enterprises and supercomputers for researchers. Add to this the wealth of technology assets and expertise gained in acquisitions such as Silicon Graphics and Cray, and you see why HPE is a leader in HPC, which researchers and engineers use to speed time to insight in fields ranging from drug discovery and medicine to energy and aerospace.

In the case of Cray, this institutional know-how extends all the way back to 1964, when Seymour Cray designed the world’s first supercomputer, the CDC 6600. In 1972 Cray founded Cray Research, where he led a small team of engineers in Chippewa Falls, Wisconsin, that developed the Cray-1. That supercomputer ranked as the world’s fastest system from 1976 to 1982. A masterpiece of engineering, the Cray-1 rewrote compute technology from processing to cooling to packaging. Cray-1 systems were widely used in government and university laboratories for large-scale scientific applications, such as simulating complex physical phenomena.

Since acquiring Cray in 2019, HPE has invested heavily in research at Chippewa Falls, which has resulted in record-breaking innovations, such as the world’s first exascale supercomputers. Once considered theoretically impossible to develop or power, exascale systems can perform over a quintillion (1,000,000,000,000,000,000) calculations per second, or 1 exaflop. A Cray exascale AI system built for the US Department of Energy’s Lawrence Livermore National Laboratory in California zooms through calculations at 1.742 exaflops, making it the fastest computer on the planet. In fact, only HPE has successfully built exascale systems, and those Cray EX supercomputers hold the top three spots on the Top500 list.

In addition to assimilating and enhancing the world-class technologies gained through the SGI acquisition, HPE has invested heavily in continuing this legacy of innovation. Today, HPE continues to lead advances in AI for supercomputing, which includes developing HPE Cray EX systems that are purpose-built for AI workloads. For instance, in collaboration with Intel, HPE designed Aurora, an HPE Cray supercomputer purpose-built to efficiently handle AI workloads for Argonne National Laboratory. Aurora is the second exascale system designed and delivered by HPE, and it also ranks as the world’s third-fastest supercomputer.

As one example of innovation that helps deliver optimized AI systems, HPE is at the bleeding edge of technologies like Direct Liquid Cooling (DLC), which is a hot topic now that today’s dense system-on-chip (SoC) devices produce incredible amounts of heat. For example, the widely used NVIDIA H200 GPU is manufactured on a cutting-edge 4 nm process node to pack 80 billion transistors onto a single sliver of silicon. Just one of these GPUs, when running at full speed, can consume up to 700 watts of power, nearly all of which ends up as heat. If you have eight H200s in a server, that’s 5,600 watts for the GPUs alone, which pushes the limits of traditional air cooling techniques. To address this challenge, HPE has pioneered new DLC technologies as the most effective way to cool next-generation AI systems. In 2024, HPE introduced a 100% fanless direct liquid cooling system architecture for large-scale AI deployments, which reduces cooling power required per server blade by 37%, lowering electricity costs, carbon emissions, and data center fan noise.
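The per-server arithmetic above is easy to extend to a whole rack when scoping a cooling design. The following is a minimal sketch, not an HPE or ComnetCo sizing tool: the function names are hypothetical, the rack of four servers is an assumed example, and the figures cover GPU power only (CPUs, memory, NICs, and fans are ignored).

```python
# Per-GPU peak figure quoted in the text (H200 running at full speed).
GPU_MAX_WATTS = 700


def server_gpu_watts(gpus_per_server: int, watts_per_gpu: int = GPU_MAX_WATTS) -> int:
    """Peak GPU power draw for one server, in watts (GPUs only)."""
    return gpus_per_server * watts_per_gpu


def rack_gpu_kilowatts(servers_per_rack: int, gpus_per_server: int,
                       watts_per_gpu: int = GPU_MAX_WATTS) -> float:
    """Peak GPU power draw for one rack, in kilowatts (GPUs only)."""
    return servers_per_rack * server_gpu_watts(gpus_per_server, watts_per_gpu) / 1000


# Eight H200s in one server, as in the example above.
print(server_gpu_watts(8))       # 5600

# Hypothetical rack holding four such servers (an assumption for illustration).
print(rack_gpu_kilowatts(4, 8))  # 22.4
```

Even this simplified estimate shows how quickly GPU heat alone adds up per rack, which is the pressure that pushes designs toward liquid cooling.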

AI workloads have pushed the limits of air cooling, which is why Aurora uses direct liquid cooling to dissipate the heat generated by powerful Intel blades.

Fitting together the pieces of an optimized AI compute system

A custom-tuned system begins with the customer’s spec, but getting a system that meets your AI needs up and running requires more than partnering with a leading maker like HPE. It also requires trusted experts who can help you navigate the entire process, from pre-sales scoping studies to deployment to post-deployment support. This includes right-sizing a system for your needs, because not everyone needs or can afford a massively powerful machine like Aurora.

As an HPE Gold Partner, ComnetCo provides that expertise, derived from 20 years of experience deploying systems ranging from some of the world’s fastest supercomputers to smaller systems that speed time-to-insight at leading universities, engineering departments, and teaching hospitals.

This expertise includes navigating the vast range of solutions available from HPE and other partners, such as NVIDIA’s ConnectX InfiniBand SmartNICs, which deliver industry-leading performance and efficiency for AI systems. Fast memory linked by fast networking is also key to efficient AI performance, as the machine must sometimes access many billions of data points in LLMs with lightning speed.

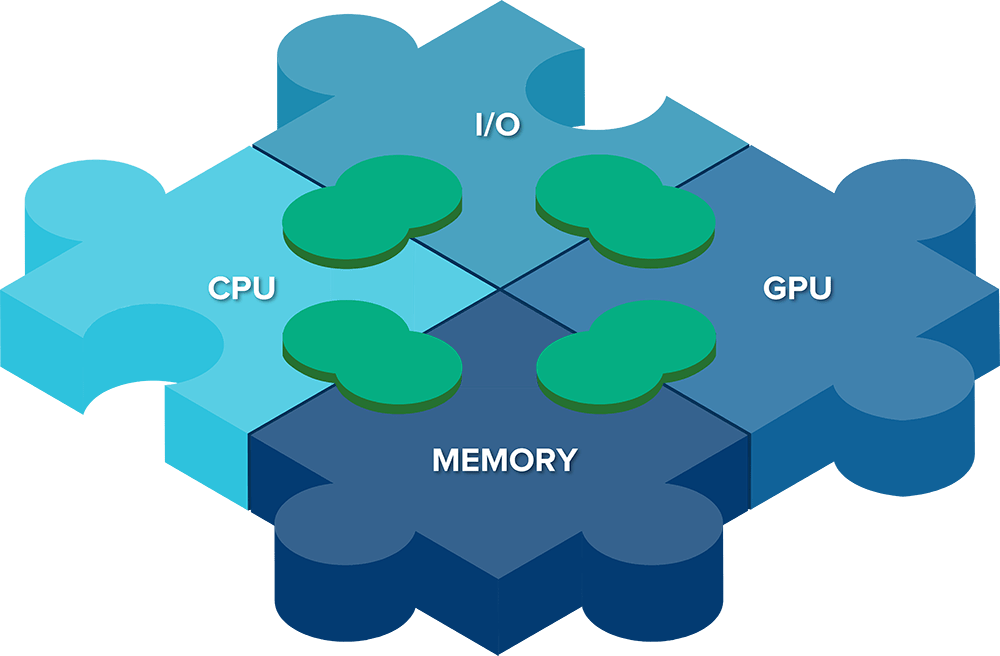

ComnetCo also provides expertise in linking the various components of an AI system (e.g., I/O, memory, CPUs, and GPUs) using open industry standards, which is an important aspect of an efficient architecture that avoids vendor lock-in. This also involves working with other partners to source not only compute power but also software and networking equipment, such as NVIDIA’s state-of-the-art NICs (ComnetCo is an NVIDIA Elite Partner).

ComnetCo uses decades of experience to link the various pieces of an AI compute system, using open standards as the glue.

Decisions, decisions, decisions

In short, network optimization involves an incredible number of decisions, covering everything from network speed to network density (NICs per node) to cable lengths. In making these design choices, ComnetCo experts weigh the trade-offs associated with each decision. For example, in what cases is it worth cutting down to four NICs per node rather than eight? Should your system’s InfiniBand (IB) run at 200 Gb/s or 400 Gb/s? Or, for another example, could rearranging nodes and switches within rack layouts lower the cost of cabling, given that cables past a certain length need to be optical and optical cables are significantly more expensive than copper? What’s more, speed and density are generally determined by AI workload type and size, while optimizing cable lengths requires an understanding of your unique environment to frame out the maximum number of nodes per rack and then arrange them in the most cost-effective way.

Carefully balancing these main network considerations is one way in which ComnetCo experts optimize a system’s networking, which allows for strategic cost-cutting so that more of the budget goes to compute rather than other cluster components. That is, optimizing your AI compute system and its networking means more bang for the buck.
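To make those trade-offs a little more concrete, here is a rough sketch of how the two questions above (NIC count versus link speed, and copper versus optical cabling) might be compared on paper. It is only an illustration under stated assumptions: the function and class names are hypothetical, and the 3 m copper limit and per-cable prices are placeholder values, not real product specifications or quotes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CablePricing:
    """Placeholder cabling assumptions; replace with real vendor figures."""
    copper_max_m: float = 3.0    # assumed length beyond which optical is needed
    copper_cost: float = 100.0   # assumed cost per copper cable
    optical_cost: float = 500.0  # assumed cost per optical cable


def node_bandwidth_gbps(nics_per_node: int, link_gbps: int) -> int:
    """Aggregate network bandwidth per node: NIC count times link speed."""
    return nics_per_node * link_gbps


def cabling_cost(lengths_m: list[float], pricing: CablePricing = CablePricing()) -> float:
    """Total cable cost: copper up to the length limit, optical beyond it."""
    return sum(
        pricing.copper_cost if length <= pricing.copper_max_m else pricing.optical_cost
        for length in lengths_m
    )


# Four NICs at 400 Gb/s give the same per-node bandwidth as eight at 200 Gb/s.
print(node_bandwidth_gbps(4, 400), node_bandwidth_gbps(8, 200))

# Two hypothetical rack layouts with the same number of cables.
spread_out = [1.0, 2.0, 2.5, 5.0, 7.0]  # two runs exceed the copper limit
compact = [1.0, 1.5, 2.0, 2.5, 3.0]     # every run stays within the copper limit
print(cabling_cost(spread_out), cabling_cost(compact))
```

With these placeholder numbers, the compact layout costs well under half as much to cable, which is the kind of saving that rearranging nodes and switches is meant to find. A real design would also factor in switch port counts, topology, and workload communication patterns.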